
The only AI-SRE platform that helps reduce observability costs

Background agents run LLM-optimized scripts ("tools") to collect data from your environment and turn it into insights.

It feels like using ChatGPT...

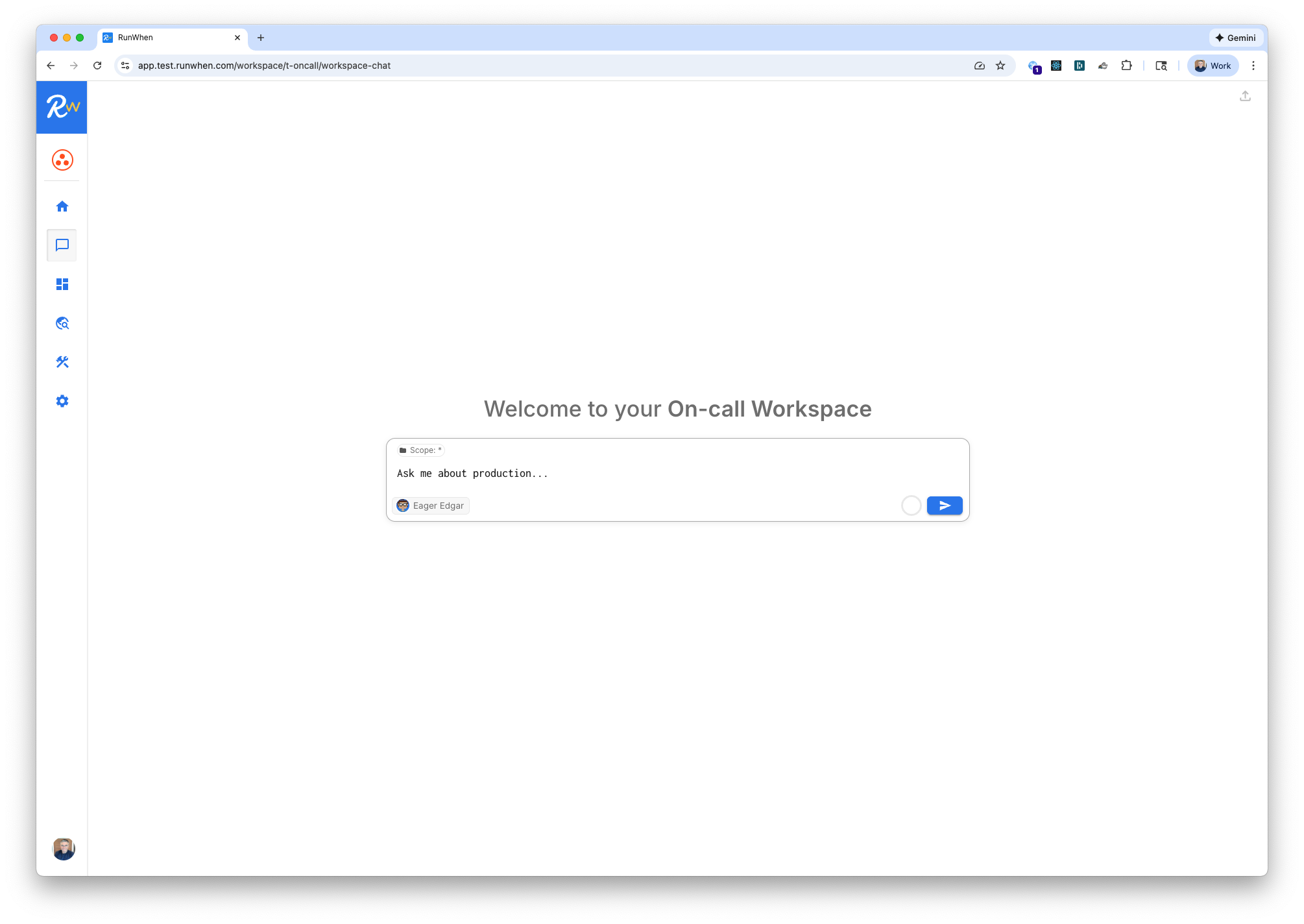

...but it uses "tools" to fetch real-time answers from infrastructure, platform, applications, data, logs, alerts, automation, cost, standard operating procedures, runbooks, ...

ChatGPT takes a question and searches the web in the background, using the results to generate an answer.

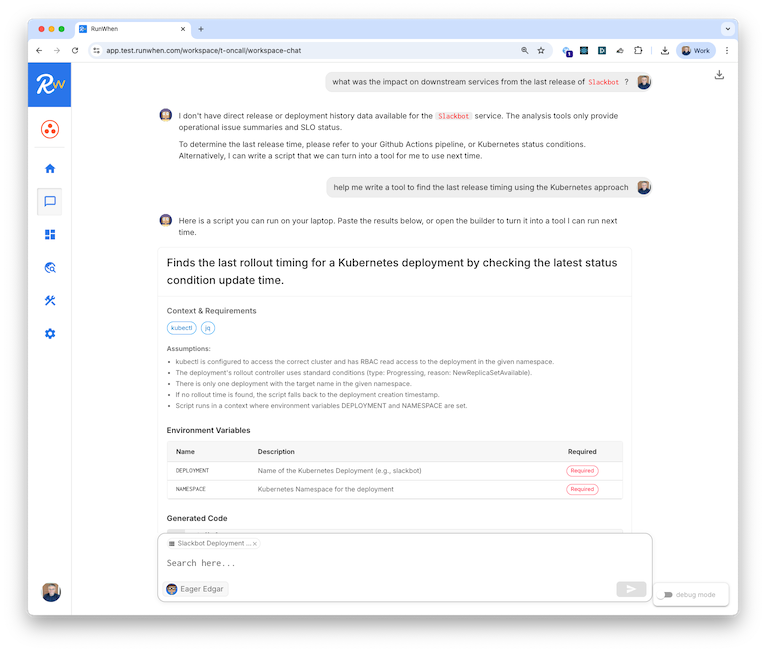

RunWhen takes a question and runs LLM-optimized scripts ("tools") in the background, using the output to generate an answer.

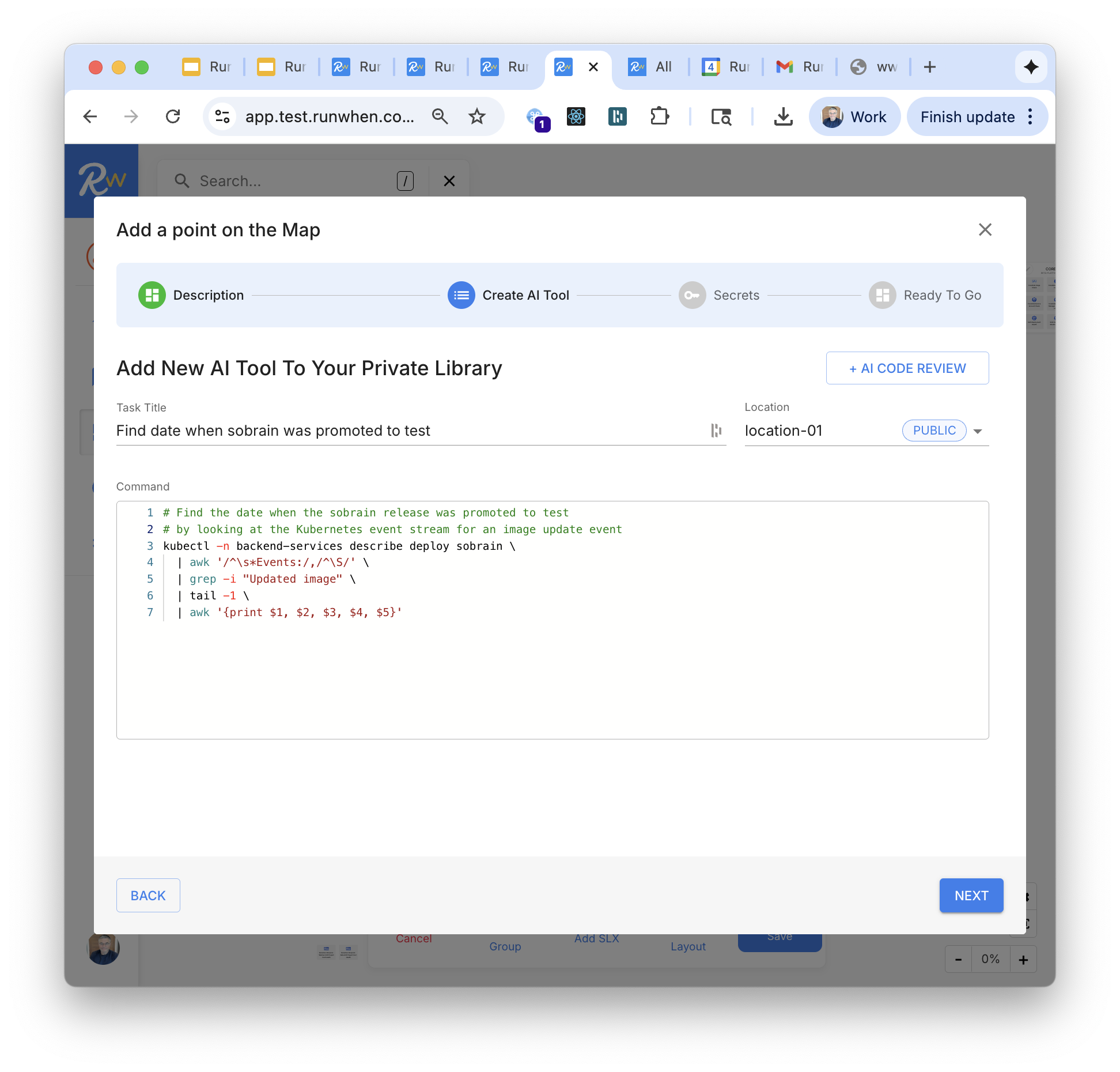

Any engineer can write a new tool

Tools are just LLM-optimized scripts. Our FDEs will work with your team to build 30 new tools in 30 days in addition to the tools that come out of the box.
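
As a rough illustration only, a tool in this sense could be as small as the Python sketch below: a script that takes raw data and returns a short, structured summary that a language model can read easily. The function name, the output fields and the sample data are all assumptions made up for this example; this is not RunWhen's actual tool format or API.

```python
import json


def summarize_pod_restarts(pods, threshold=3):
    """Hypothetical tool body: flag pods whose restart count meets the threshold."""
    issues = [
        {"pod": p["name"], "restarts": p["restarts"]}
        for p in pods
        if p["restarts"] >= threshold
    ]
    # Compact, labeled output is easier for an LLM to turn into an answer.
    return {
        "title": "Pod restart check",
        "checked": len(pods),
        "issues": issues,
        "next_step": "Inspect logs for the flagged pods" if issues else "No action needed",
    }


# Sample input standing in for data a real script would collect from the environment.
sample_pods = [
    {"name": "api-7f9c", "restarts": 5},
    {"name": "worker-12ab", "restarts": 0},
]

report = summarize_pod_restarts(sample_pods)
print(json.dumps(report, indent=2))
```

In a real tool, the sample list would be replaced by data fetched from your own environment; the idea of returning a small, labeled summary stays the same.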

Every engineer can answer a question

Tools run in the background or in the foreground, gathering context from your environment to answer questions.

Your first thousand tools in minutes

Our installer configures thousands of tools from our library for your environment.

Production ready out of the box.

30 new tools in 30 days

In addition to the tools that come out of the box, our FDEs work with your team to build "30 new tools in 30 days" that integrate with your apps, infra and data as part of a PoC.

Getting started with RunWhen

FOREGROUND AGENTS

Ask questions for root cause analysis, configuration, cost, remediation and other topics.

The platform will suggest the tools to run or pull insights from the database of prior tool runs.

BACKGROUND AGENTS

Agents are constantly running tools in the background, processing the results into insights.

Ask about what happened yesterday, or connect insights to tools for notification, remediation, etc.

30 NEW TOOLS IN 30 DAYS

Our FDEs or our partners will work with your team to build new tools that bring the data you want from your infra, apps, data and workflows into each agent's context window.

You are in control.

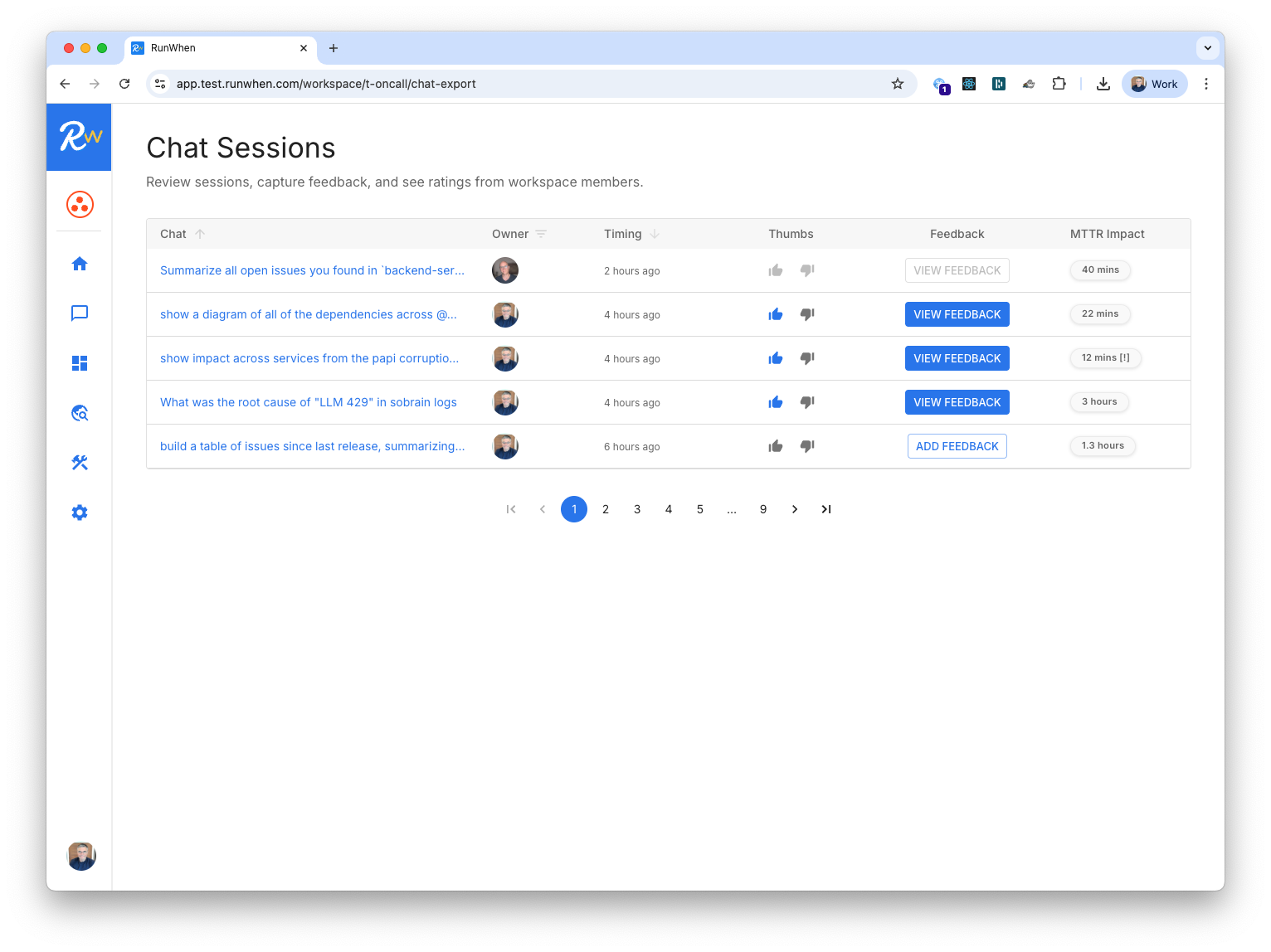

THUMBS UP?

Get AI-enhanced feedback from your users, showing where new tools should be prioritized for investigation, remediation, reporting or other uses.

Product management built in by design.

Can my team deploy RunWhen?

We work in the strictest financial services, health care and government environments in the industry.

Need help with a business case?

Our team can help you build a business case for production environments, non-production environments, or both.

We typically do this after a 30-day PoV so we can use real production data in your environment.

A (paid) community?

We are helping experienced engineers become AI SRE consultants and paid community contributors.
